Data Management for AI: Enabling Responsible and Effective AI Systems

In the era of artificial intelligence (AI), the success of AI solutions is deeply intertwined with effective data management and the quantity and quality of available data. This blog post explores the critical role of data management in enabling responsible and effective AI systems, highlighting its relevance to all users and developers of various AI solutions.

We all manage data in our daily work. By understanding and implementing robust data governance practices, we can ensure the quality, security, and transparency of our AI initiatives, as well as the alignment with the EU AI Act. This knowledge is essential for fostering innovation and maintaining competitive edge in the rapidly evolving AI landscape, not only for the success of our own endeavours, but even more importantly for creating value and success for the society at large.

Given the inseparable relationship between data and computing, CSC as a supercomputing center considers data governance and data infrastructure as key focus areas. Also, the LUMI AI Factory has a strong emphasis on data reachability and Trustworthy AI, underscoring the need for streamlined access to various kinds of data, secure environments for data processing, transparency, accountability, privacy, and data governance.

Key provisions for data in the AI Act

The EU AI Act has specific provisions regarding data, especially for general-purpose AI models and high-risk AI systems. The distinction between an AI model and an AI system is crucial for understanding the scope and application of the regulation: In essence, an AI system is a complete application that uses one or more AI models to perform the tasks needed for the system’s functionality. AI systems categorized as high risk must be developed using high-quality data sets, proper data governance and management practices, bias detection and mitigation measures, and in compliance with GDPR. The providers of general-purpose AI models must provide technical documentation and instructions, comply with the Copyright Directive, and publish a summary about the content used for model training. Thus, AI Act requires AI developers ensure their data management practices are in good shape.

Open source AI requires sound data management practices

It is nowadays well understood that open-source software offers numerous benefits, including significant cost savings, increased collaboration, and accelerated innovation. Similarly, there is a strong push for open source AI to provide users and developers of AI with similar advantages. Beyond merely using and sharing of AI models and systems, open source AI encompasses the freedom to study and modify them. To achieve this, sufficiently detailed information about the data used to train the AI models is essential so that substantially equivalent systems can in actuality be built. All publicly available training data should be listed, along with instructions on how to obtain it.

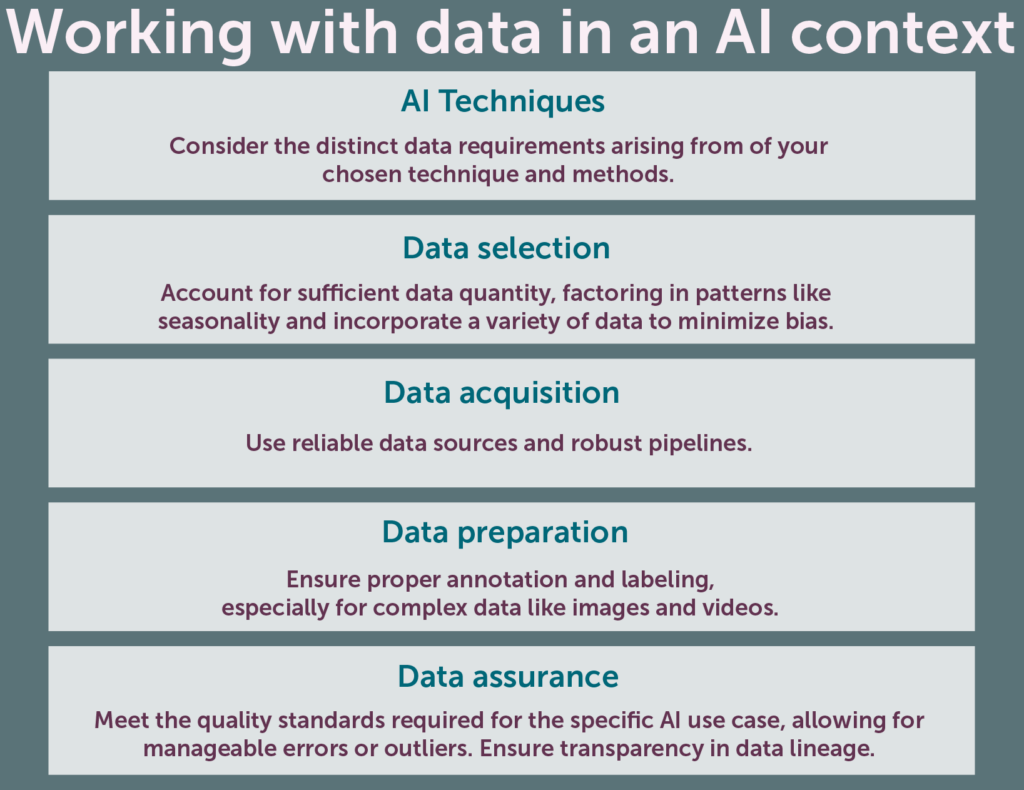

Integrating data management and operational practices with MLOps

MLOps, or Machine Learning Operations, is a set of practices that integrates machine learning, DevOps, and data engineering to deploy and maintain ML models. The goal is to enhance the efficiency, scalability, and reliability of ML systems in production environments. Data management is a crucial component of an MLOps workflow, encompassing everything from data ingestion and pre-processing to model deployment and quality monitoring. Every ML model needs to be trained and tested with appropriate datasets. For this, the datasets should be well-organized and versioned, i.e. there should be a process for tracking and managing changes to datasets over time.

CSC promotes responsible AI

CSC aims to be a pacesetter for responsible AI, and as part of this effort we are working on enabling thorough assessment of data as well as the processes and tools used to develop and use AI in our environments. This encompasses extensive and integrated data management and automated metadata capture that supports our users in risk management and quality control. Rich metadata and documentation help users to evaluate the fitness-for-purpose of datasets and tools. Data and system documentation should be user-friendly and enable responsible use of AI. CSC’s services should nudge our users towards FAIR and transparent practices.

According to Gartner AI-ready data is well managed and suitable for the specific use case [1]. All patterns, bias, errors and outliers in the data must be considered. The representativeness and size of the dataset have to be assessed and addressed if necessary. CSC aims at providing tools available for this vital assessment. First and foremost this requires extensive metadata and regarding business data, good data governance, e.g. clear data ownership and acknowledged stewardship.

In AI systems data also has to be versioned, quality controlled and data pipelines monitored and trusted. This means good data management practices have to be in place. One key point in responsible AI, for our customers as well as for other users of CSC’s services, is the transparency and documentation of data lineage [2]. Not only the original source, i.e. provenance, has to be well documented, but also all events during the data lifecycle. Cleaning and pre-processing of data should be documented. This means a considerable and sometimes almost impossible workload for the users, unless automated metadata capture and code management are applied. Fortunately, event logging, code management and metadata capture have all been identified as necessary service components in our future plans.

As the meme goes, FAIR data is FAIRly AI ready, and truly integrating data management with computing will enable CSC to be both responsible and strongly impact generating for the benefit of society and our customers and their users.

[1] Rita Sallam: “What Is AI-Ready Data? And How to Get Yours There.” Gartner, 2024. https://www.gartner.com/en/articles/ai-ready-data

[2] Bart Willemsen, Bernard Woo, Joerg Fritsch, Whit Andrews: “Predicts 2025: Privacy in the Age of AI and the Dawn of Quantum.” Gartner, 2025.

Authors: Aleksi Kallio, Jessica Parland-von Essen, Markus Koskela